
Are We Facing a Bigger Monster? The Journal of Trial and Error Visits Cambridge

By Martijn van der Meer & Max Bautista Perpinya

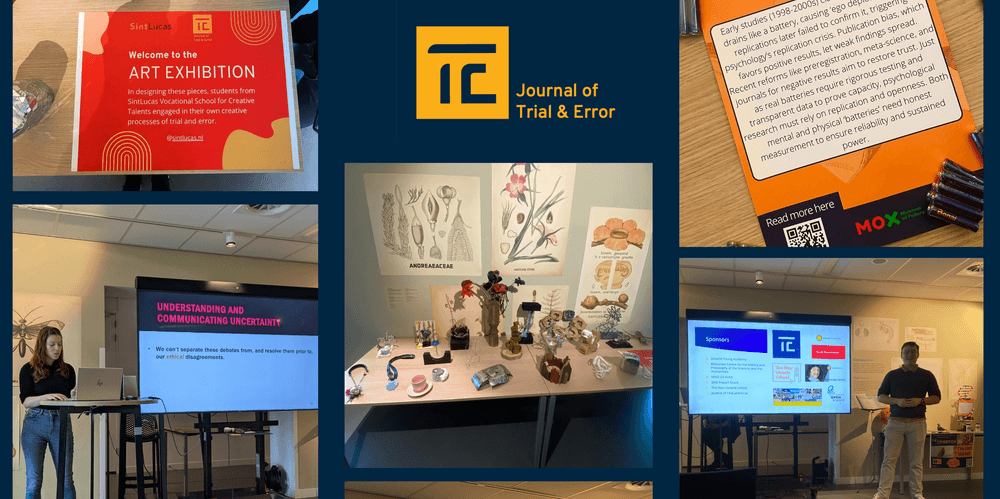

As fall hits, we look back and reflect on the beginning of summer, when all was excitement, when all the work of the previous year was put into public action, and when our voices were being heard in several places in Europe.

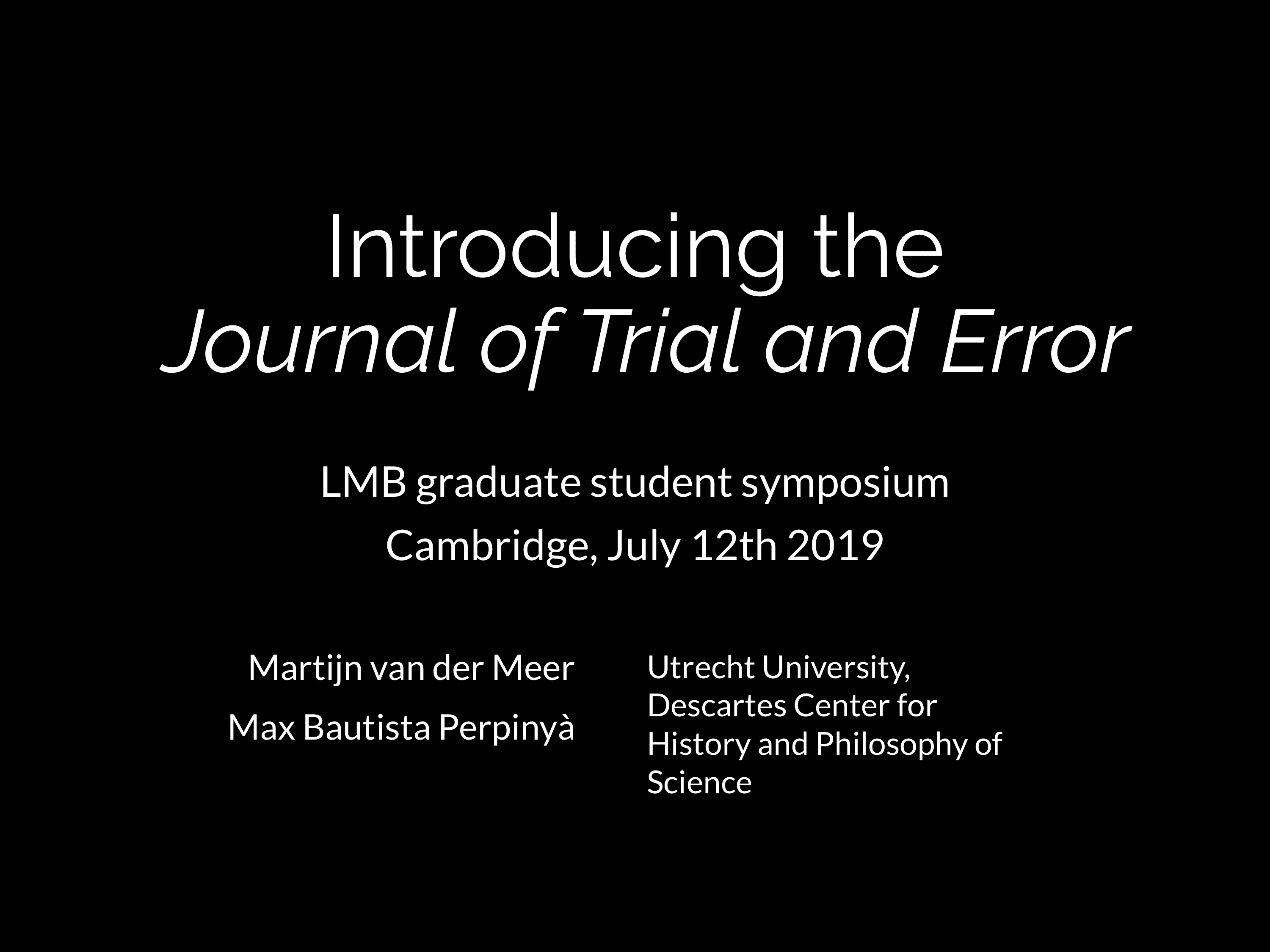

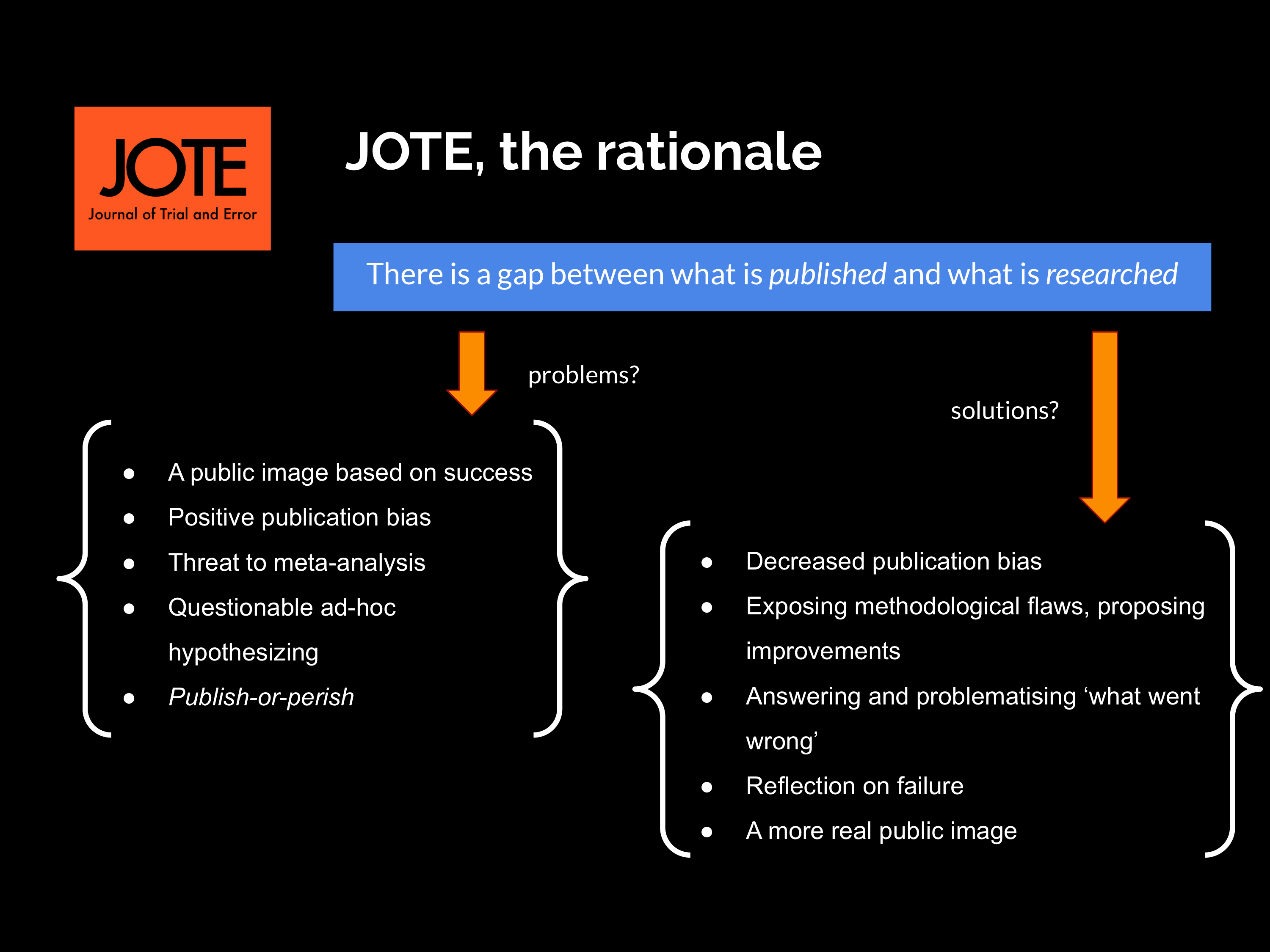

This piece deals with something specific: our experience of presenting the Journal of Trial and Error in Cambridge at the Laboratory of Molecular Biology (LMB) Annual Graduate Student Symposium. In writing this, we hope to bring some order to the information that we gathered from talking to scientists, as a form of take-home message, but also as a grander reflection on what we experience as key dynamic movements and uncomfortable knots in the practice of science and the publishing of articles.

The story that was told

On July 10th, we arrived late at Cambridge train station. The sun had set, and the twilight truly gave us the sense of being in a town of tradition and history. All was quiet; the few pubs and nightclubs seemed just a small obligatory addition, the wandering tourists just a recent blister for the locals. What we felt to be Cambridge’s essence was the intellectual brewing happening behind walls and the throwing of young minds into books and lab benches.

The conference started, and we wore our name tags proudly, if a little clownishly. We, a pure historian (Martijn) and a neuroscientist in the process of turning historian (Max), felt like personified anomalies of science: being in Cambridge, at the LMB research department (host of no fewer than twelve Nobel Prize awards), at a structural and molecular biology symposium, and yes, we went there to talk about failure. As our manifesto hopefully makes clear, we didn’t and still don’t mean ‘failure’ in any demeaning sense. We are interested in the role of making mistakes at the bench and on the whiteboard, and we think that errors are intrinsic and productive. This was the story we tried to tell.

After listening to talks about hardcore cutting-edge biomolecular science, of which Max could understand about 60% and Martijn about 10%, we intended to start our talk by doing what those reflecting on science do best: asking annoying questions. With a first slide containing one simple sentence – “What is science?” – success was guaranteed. The audience, mainly PhD students from Cambridge, answered with sophisticated shouts about an “experimental method”, a “certain scientific scrutiny”, and “systematic observation”. Method, as became clear from the answers, demarcated real science. But what, then, is this method specifically?

From a historical point of view, a possible answer lies with those who first wrote explicitly about this methodological scrutiny. Being in Cambridge, we pointed dramatically at Francis Bacon (1561-1626) and his Novum Organum (1620), in which he argued that natural philosophy should move away from dogmatic reasoning and focus instead on gaining knowledge by means of empirical reasoning. A natural philosopher, Bacon argued, should closely observe “the works of nature” by carefully producing ‘quality facts’. From deductive knowledge-gaining to inductive knowledge-gaining, as philosophers would say.

However, this inductive reasoning requires that one's individual observations be communicated thoroughly and trustworthily, as Bacon was well aware. As may be unexpected from the founding father of the experimental method, the Cambridge scholar was very explicit about what should be communicated. In his Novum Organum, Bacon writes:

“First the materials, and their quantities and proportions; Next the Instruments and Engines required; then the use and operation of every Instrument; then the work itself and all the process thereof with the times and seasons of doing every part thereof, …

So far, it sounds really familiar to modern readers. But the quote goes on:

… then the Errors which may be committed, and again those things which conduce to make.”

For Utrecht historian of art, science and technology Prof. Dr. Sven Dupré – who pointed us in the direction of Bacon’s quote – this passage shows how the open-endedness of the new experimentalist way of communicating was crucial to the new way of doing natural philosophy. For the purpose of our talk, we tried to surprise the audience by showing that the father of what we retrospectively call science emphasized communicating failures as a central element of doing good science.

This line of reasoning enabled us to talk about another hero of science, who has been the object of research in one of the most sophisticated and inspiring studies in the history (and sociology) of science: Robert Boyle (1627-1691). In Leviathan and the Air-Pump, Steven Shapin and Simon Schaffer famously explained the debate about the existence of a vacuum in terms of social context. They problematize the idea that the controversy between Robert Boyle and Thomas Hobbes was easily settled by experiment, that is, by the air-pump demonstrations the former designed. After all, Boyle had to convince his peers that the natural facts he discovered could not be doubted. Therefore, demonstrations in front of peers, so that other natural philosophers could witness Boyle’s conclusions, were one of the most important rhetorical techniques of his experimental science.

Obviously, natural philosophers who could not attend these demonstrations also had to be convinced, albeit virtually. Shapin and Schaffer show in that regard how, again, communicating science effectively is the key to success. In line with Bacon, Boyle was very explicit in communicating errors in the process of science in the making. In 1660, he writes in his New Experiments what an experimentalist should do when his experimental set-ups malfunction. In the case of a leaking air pump, for example, Boyle declares on page 494:

“. . . I think it becomes one, that professeth himself a faithful relator of experiments not to conceal such unfortunate contingencies.”

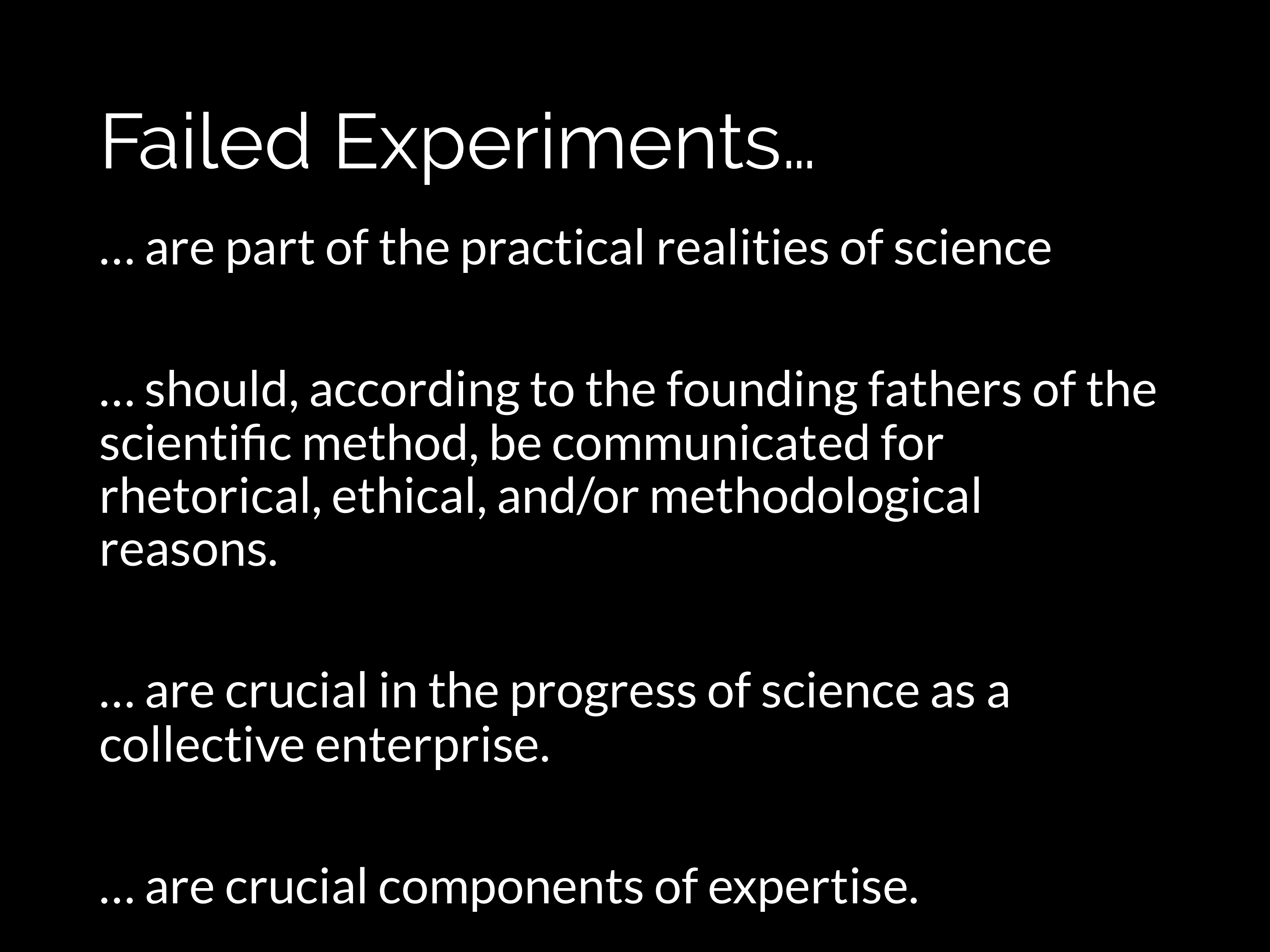

Shapin and Schaffer interpret this explicit writing about failed experiments as having a mainly rhetorical purpose: “On the one hand, it allayed anxieties in those neophyte experimentalists whose expectations of success were not immediately fulfilled. On the other, it assured the reader that the relator was not willfully suppressing inconvenient evidence, that he was in fact being faithful to reality.” Be it for rhetorical reasons, as in Boyle’s case, or for normative reasons, as in Bacon’s case, communicating the messy process of doing science has a long history. How, we asked our audience, is that the case today?

The science of trial and error

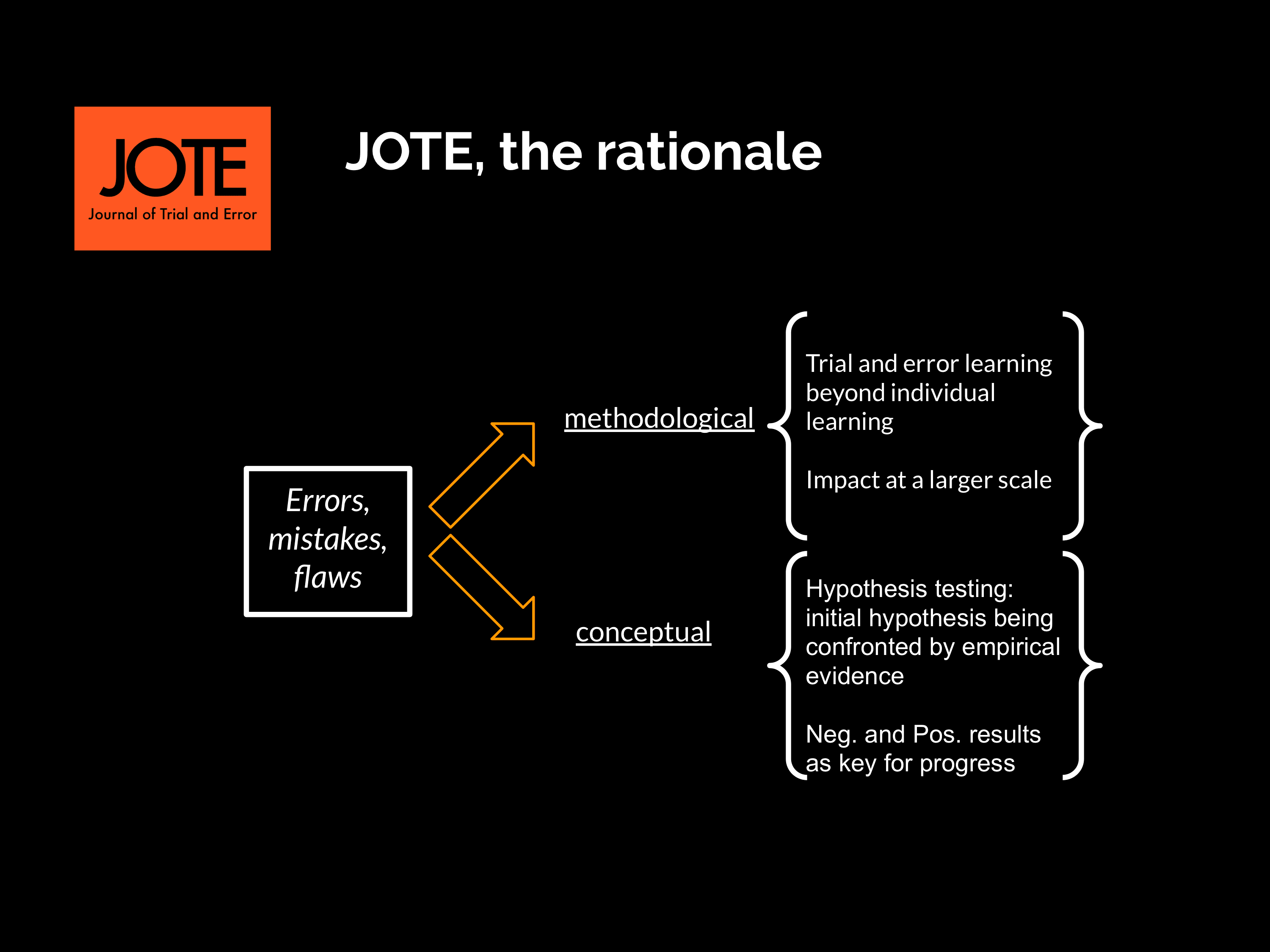

Leaving the historical anecdotes behind, we went on to talk about the present. Our project, embracing the call for transparency of the Open Science movements (open data, shared resources, collaborative work…), aims to bring to light those mistakes that happen in the lab. We see those trial and error processes as falling into two classes: methodological and conceptual.

Methodological mistakes that are of interest to us (and to working scientists as well) are those which tell something about widely shared methods, and that, in revealing flaws, advance scientific communities by spelling out the do’s and don’ts of specific practices. During our talk we emphasised that these are not the trial and error processes limited to one's personal learning of the materials and methods, but rather mistakes that show individual scientists better uses and limitations of techniques. When talking about these kinds of mistakes during the coffee breaks at the conference, scientists of all stages illustrated our principle with more concrete examples, ranging from optimal buffer concentrations to unsuccessful antibodies, from validated animal models to unfit fMRI sequences.

The impression we got from our Cambridge audience was that many labs try to improve and fine-tune a method (what is plainly known as “making it work”), and after months of trial and error, someone at a conference’s coffee break, or over a beer in a pub, tells them: “oh, yeah, that doesn’t work. Try that [insert here your specific, favourite lab technique].” What came from the stories of these scientists, telling in excruciating detail their struggles at the bench, was a frustration that the information that they needed was already known. Any scientific niche has a handful of labs around the world working with similar techniques and theoretical concepts. But not all these forms of knowledge, and especially the hands-on experimental details, are publicly available. Some of the fine-tuning experimental steps are passed on only by word of mouth, and although they are key factors, they are known only in some labs that are lucky enough to be at the apex of the field and at the frontier of the known.

There is some half-hidden, private knowledge that those labs that work with a specific technique or theoretical scope all seem to know. “Apparently, everybody knows that!”, many PhD students shouted in pain at the conference. The younger these scientists were, the more baffled they seemed when realising that not all is out there in the open. “It wouldn’t take much to write that up, you know,” they would comment. A few lines in a paper or a database would do; it would spare them months of seemingly futile work and, more importantly, limit the amount of resources used and give them time for “more important stuff.” Aside from the personal frustration behind these stories, there seemed to be an underlying ethical worry regarding the lack of efficiency in the use of (public) funds, and the lack of transparency of certain labs in communicating methodological details which are key to the advancement of science as a group enterprise. This dodgy area of “everybody knows that, but nobody says” in certain fields seemed to us to be directly connected to the current hypercompetitiveness in science. Maintaining secrecy of techniques enables labs to keep the upper hand when it comes to publishing. It is all about a race to publication, not even a race to discovery anymore. Let alone public discovery.

If revealing methodological mistakes (and solutions!) looked like a tough thing to communicate in the age of competition, opening up the possibility of disclosing conceptual mistakes seems to touch upon something even more sensitive. And to begin with, perhaps the idea of ‘conceptual mistakes’ is not the best marketing strategy on our part. But let’s carry on. Our concept of ‘mistake’ isn’t as bad as it sounds. Mistake, error, failure or flaw is meant to be understood within a dynamic process of trial and error, and as an inherent property of science. At the edge of ‘the known’ we find uncertainty, and so, what else can we expect but to make some conceptual mistakes in our hypotheses, more often than not? A hypothesis can only be judged conceptually flawed retrospectively, that is, after the fact of having experimented. By facing up to empirical data, hypotheses gain support (positive results) or fail to gain it (negative results).

The problem arises when most of the articles out there seem to consist mostly of supporting evidence. This problem is known as positive publication bias (you can read about it in a post, in detail in our manifesto, or in our brochure), and is the tendency to preferentially submit, accept, and publish novel, statistically positive results (those that reject the null hypothesis). Understanding why there is such bias is a complicated task. Why are there more positive results? Are scientists proposing hypotheses ad hoc? Are they waiting for the right results to appear? Are they fine-tuning methods and theories until they have it all figured out with a higher level of certainty? Is there an inherent asymmetry between positive and negative results, which leads to a preference for the former? Is there an institutionalised pressure to disclose only novelty embedded in biomedical promises? What are the incentives to obscure negative results and reframe themes positively? What is the relationship between this publication tendency and grant applications? Is it a practical limitation of time, because negative results aren’t as interesting and writing manuscripts takes time? Or is it, as Francis Bacon suggested, a natural tendency of the mind: “It is the perpetual error of the human intellect to be more moved and excited by affirmatives than by negatives”?

Now, don’t let this avalanche of questions run you over. These are all partial explanations for why scientists publish virtually only statistically positive results. Publication bias is a swamp, and it’s foggy out there. These explanations, ranging from the most pragmatic to the most sociological, are the inhabitants of this swamp. Perhaps the stagnant waters reek of decaying matter, perhaps there are too many flies, infesting bacteria, and invisible quicksand. We have been trying to identify the dwellers of the swamp, that is, to distinguish what makes scientists conceal their mistakes (so we could change that). But in this search, we have found an even creepier creature. In talking to scientists we have progressively realised that we were standing on something sticky, large, invisible. This swamp is inhabited by a monster that all seem to know, but none seem to dare to awaken.

Getting to know the monster

The project of turning negative results into productive elements of the scientific process may seem like an innocent idea; but while researching the topic, and especially while at the Cambridge symposium, we came across an intricate network of reasons, interests, institutions, and yes, power, that may complicate things for us. Our idealistic project of ‘making errors count’ was confronted early on by the interests of big publishers, the structure of funding bodies, and the reputations of scientists at stake. While we haven’t actually been confronted directly by these powers, we have been warned time and again that our project isn’t a small addition to an already complex network of scientific communication; rather, it seems to disturb (at least theoretically) how the whole thing is built. However, when one talks about ‘power’ in science as an institution, one may miss the personal and very specific components of the relationships within the lab. Our experience in Cambridge changed precisely that: it gave us insight into what goes on behind the scenes. It showed us the face of the monster, a bigger monster than we thought we faced.

First of all, we are glad to say that our idea was generally met with enthusiasm, especially from more junior researchers. PhD candidates told us about their experiences, their frustrations, and their dissatisfaction with how things were sometimes run at their labs. Their interest in our journal sprouted from their own confrontation with a system that praises novelty and success, and which assigns errors and negative results only a supplementary role. But young scientists seem eager to bring them to the forefront: “It’s a great idea!”; “we really need this!”

It then seemed as though all the ingredients were in place: young scientists believed in the idea, and they thought it was worthwhile, productive and necessary. They had their share of examples of how they could contribute. However, while the ingredients were there, the heat was missing. When we asked whether they would be willing to publish an article based on their experimental work that went wrong, they would begin to step backwards. Why?

To understand this, one needs to bear in mind that laboratories are hierarchical institutions. A word that gets thrown around very often (in a combination of praise, fear, resentment, admiration, and conformity) is “PI”. The PI (Principal Investigator) is the boss: the head of the lab, the one guiding the line of research, allocating projects to people, discussing results and future experiments, and writing and proofreading articles for submission. Most PIs are not dictatorial, and do take into consideration the direction that PhD students and post-docs argue for in their research. However, PIs do have the last word when it comes to what goes and what does not go to press. Grants are awarded according to the lab’s output (mostly the published articles), thus a tight control exists on what goes ‘public’. Funding bodies will look at the number of papers and the ranking of the journals in which they are published, and since high-ranking journals typically reject articles with statistically non-significant results, PIs will not even try to spend time writing articles that (1) will not count much in receiving future grants, and (2) may damage their credibility as successful researchers.

It is interesting to know that publication bias is mostly a tendency not to submit negative/null results, meaning that these kinds of results will be filtered out mostly at the level of the individual labs (with the PI as the final authority), and not at the level of the editorial boards. Of course, editorial policies that also encourage submissions of statistically non-significant results (such as those of eLife or PLOS ONE) will help in reversing the publication bias tendency. However, the mere existence of these non-traditional journals seems to have little effect on the PIs at high-profile centers where competition is fierce. At Cambridge, we often heard that although PhD candidates know of the existence of these journals, and are excited about initiatives like ours, they quickly say, “my PI is only interested in getting Nature’s and Science’s”. The one-word journals (like the previous ones and Cell) are high-ranking journals, and publishing in them will tip the balance of fortune (the grant-giving institutions) towards your lab.

PIs not only write articles and propose which journals they should go to, but also guide the PhD candidates they mentor in what experiments to pursue and how to allocate time and resources. Thus, the ‘power of PIs’ is not so much a direct suppression of certain kinds of results (negative and null), but a general pull in the opposite direction: investing time in generating cutting-edge, unique, successful, grant-winning results. The hierarchical difference between PhD candidate and PI is manifested in the power of the latter to manage the time of the former. The junior researchers we met at Cambridge were hard-working, devoted scientists, and although much impressed by and enthusiastic about our project, they are time-constrained by deadlines and goals – while PIs set the tempo.

In the days of the conference, we went from the “it’s a great idea” to “I don’t dare to be the one stepping up”. We could now translate the PhD candidates’ fear of “stepping up” to their PI into the more practical “I don’t have the time”. The issue with negative or null results is that the experimenter will not typically assign them the same level of certainty as a positive result. A key question that was asked during our presentation was this: “how can you be sure that a negative is truly negative?” The idea behind this comment is that while a positive result is double- and triple-checked, and thoroughly put to the test, negative results are not held to the same level of scrutiny. There seems to be an asymmetry when it comes to the direction of the results. A positive result (for example, protein X is involved in behaviour A) will be assessed through alternative testing methods (for example, not only that removal of X leads to absence of behaviour A, but that genetic and physiological evidence points in the same direction, drawing a multilevel network of gene-protein-function-behaviour). However, a negative result (that protein Y is not involved in behaviour A) won’t be assessed so thoroughly. At least in the field of molecular biology, negative results tend to be taken more at face value than positive results are: “if it doesn’t work once, you try again; if it doesn’t work the second time, then that’s what it is.” Scientists won’t spend time detailing the ways a mechanism isn’t, and after all, the possibilities are endless: Y is not involved in A, nor in B, C, D…; and behaviour A is not caused by Y, Z, W…

And this is what we think is the key issue in making labs publish their negative or null results: while their content is relevant to the general scientific communities (especially with regard to the reliability of meta-analyses), they are hardly ever published because they do not contribute to the individual laboratories. The bigger monster that we were facing (the power of PIs, time-management, scrutiny) makes us realise that we “are asking scientists to be altruistic, while scientific practice and publishing is a highly competitive race”, as one of the other speakers at the conference told us.

While this conclusion may leave anyone discouraged from fighting a system of laboratories, publishers and funding bodies that encourages individualistic science, we do not want to stand by passively and surrender. From the point of view of those sciences reflecting on sciences (history and philosophy of science [HPS], or science, technology, and society studies [STS]), we ought to reflect on these issues and offer solutions for discussion, for example concerning the implications of a hierarchical organisation in laboratories. The burden also rests on our shoulders (HPS, STS) to find fitting solutions for a situation in science which is often criticised, and less often improved. From the point of view of the Journal of Trial and Error, we aim to create a platform where these kinds of scholarly traditions, scientists themselves, and editors are put into direct contact, in a forum where experimental science and multidisciplinary reflection take place. At Cambridge, it became clear that we are here to help fight the monster of science. All together.

Martijn is a historian of science and medicine and works as a lecturer and researcher at Erasmus Medical Centre and Erasmus University Rotterdam. He is also a policy advisor on responsible research at Tilburg University. In 2018, he co-founded the Center of Trial and Error and currently chairs the board.